AMD dunks on Nvidia with record-breaking new datacenter GPUs

AMD has unveiled a new line of datacenter GPUs that supposedly blow the competition out of the water for high-performance computing (HPC) workloads.

Successor to the AMD Instinct MI100 range, the Instinct MI200 series is said to deliver up to 4.9x better performance than Nvidia A100 GPUs in a supercomputing context, reaching 47.9 teraFLOPS. For AI training workloads, meanwhile, the most powerful MI200 SKU is said to be 1.2x faster than competing accelerators, according to AMD's internal testing.

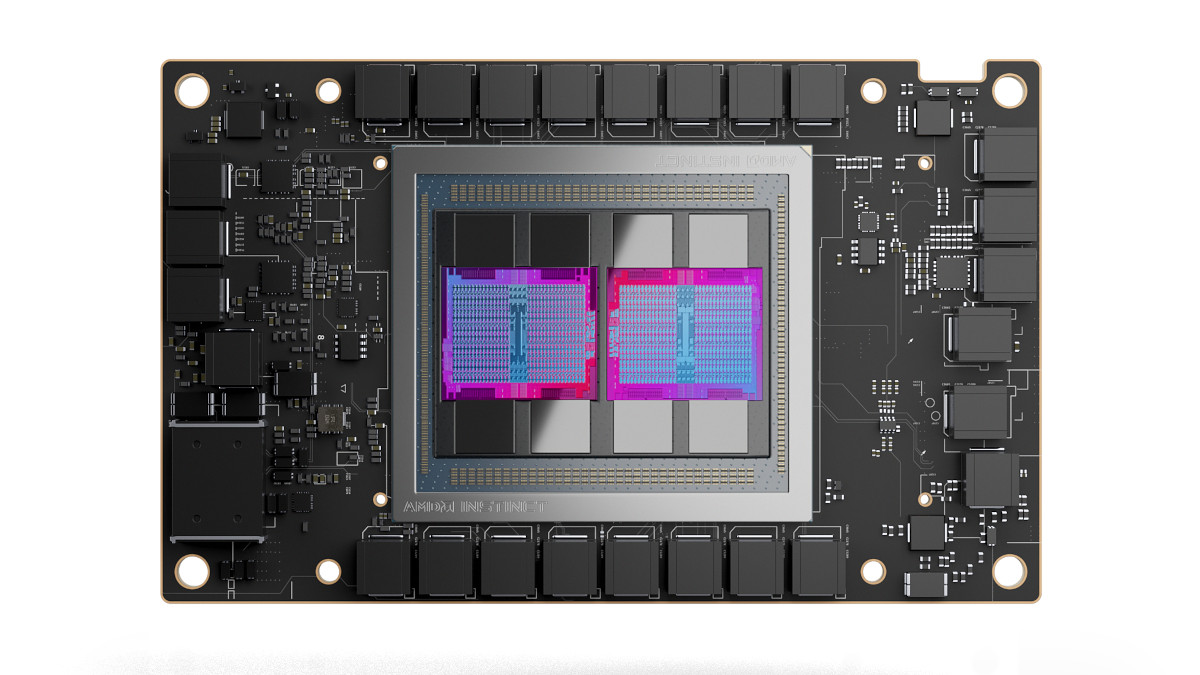

The accelerators are built on the new AMD CDNA 2 architecture and also feature the world’s first multi-die GPU design, resulting in a record-breaking 3.2TB/second peak theoretical memory bandwidth (2.7x higher than the previous generation of Instinct GPUs).

During a briefing session, TechRadar Pro was told the MI200 series comes in two “distinct flavors”: the MI200 open accelerator module (OAM), purpose-built for the most demanding HPC and AI workloads, and the MI210 PCIe for traditional servers. The former is available now, with the latter soon to follow.

World’s fastest HPC GPU

Last year, AMD decided to split its range of GPUs into two channels: gaming and HPC. This bifurcation allowed the company to home in on the traits most important in each scenario, and gave rise to the Instinct MI100 range, which took the title of fastest HPC GPU.

Now, the company is aiming to build on this progress with the MI200 series, which will itself reportedly deliver a “multi-generational leap in performance” that will help accelerate research timelines and drive the convergence of AI and HPC workloads.

“AMD Instinct MI200 accelerators deliver leadership HPC and AI performance, helping scientists make generational leaps in research that can dramatically shorten the time between initial hypothesis and discovery,” explained Forrest Norrod, SVP and GM of AMD’s data center branch.

“With key innovations in architecture, packaging and system design, the AMD Instinct MI200 series accelerators are the most advanced datacenter GPUs ever, providing exceptional performance for supercomputers and datacenters to solve the world’s most complex problems.”

The first Instinct MI200 GPUs will appear in the upcoming Frontier supercomputer, the first exascale machine to come out of the US. Hosted at the Oak Ridge National Laboratory (ORNL), Frontier is expected to deliver up to 1.5 exaFLOPS of peak performance and will open to scientists next year.

Let’s not forget EPYC

Although the Instinct MI200 announcement headlined the briefing, AMD also took the opportunity to unveil a new iteration of its third-generation EPYC CPUs, codenamed Milan-X.

The new server chips improve upon AMD’s Milan CPUs, launched in March, with the introduction of 3D chiplet technology, developed in partnership with TSMC. In short, CPU components like the logic unit and cache memory are stacked on top of each other, utilizing vertical space rather than expanding the total surface area of the chip.

Courtesy of this new technology, Milan-X CPUs offer 3x more L3 cache than previous third-gen EPYC, with a 50% average performance uplift across targeted workloads.

Meanwhile, Milan-X-based servers are said to offer a 66% performance increase over base Milan CPUs, making these the fastest server processors on the market for technical computing workloads.

The new range of EPYC CPUs with 3D V-Cache will launch in Q1 next year.


from TechRadar - All the latest technology news https://ift.tt/3qqQ4mS
