Why you don’t really know what you know

In July, Joseph Giaime, a physics professor at Louisiana State University and Caltech, gave me a tour of one of the most complex science experiments in the world. He did it via Zoom on his iPad. He showed me a control room of LIGO, a large physics collaboration based in Louisiana and Washington state. In 2015, LIGO was the first project to directly detect gravitational waves, created by the collision of two black holes 1.3 billion light-years away.

About 30 large monitors displayed various aspects of LIGO’s status. The system monitors tens of thousands of data channels in real time. Video screens portrayed light scattering off optics, and data charts depicted instrument vibrations from seismic activity and human movement.

I was visiting this complicated operation, on which hundreds of specialists in discrete scientific subfields work together, to try to answer a seemingly simple question: What does it really mean to know anything? How well can we understand the world when so much of our knowledge relies on evidence and argument provided by others?

The question matters not only to scientists. Many other fields are becoming more complex, and we have access to far more information and informed opinions than ever before. Yet at the same time, increasing political polarization and misinformation are making it hard to know whom or what to trust. Medical advances, political discourse, management practice, and a good deal of daily life all ride on how we evaluate and distribute knowledge.

We overstate enormously the individual’s ability to amass knowledge, and understate society’s role in possessing it. You may know that diesel fuel is bad for gas engines and that plants use photosynthesis, but can you define diesel or explain photosynthesis, let alone prove photosynthesis happens? Knowledge, as I came to recognize while researching this article, depends as much on trust and relationships as it does on textbooks and observations.

Thirty-five years ago, the philosopher John Hardwig published a paper on what he called “epistemic dependence,” our reliance on others’ knowledge. The paper—well-cited in some academic circles but largely unknown elsewhere—only grows in relevance as society and knowledge become more complex.

One common definition of knowledge is “justified true belief”—facts you can support with data and logic. As individuals, though, we rarely have the time or skills to justify our own beliefs. So what do we really mean when we say we know something? Hardwig posed a dilemma: Either much of our knowledge can be held only by a collective, not an individual, or individuals can “know” things they don’t really understand. (He chose the second option.)


This might seem like an abstract philosophical question. At the end of the day, whatever “knowing” means, it’s clear we rely on other people for it. “If the fundamental question is ‘Who has the knowledge?’—nothing rides on that. And I don’t really care,” says Steven Sloman, a cognitive scientist at Brown University and coauthor of The Knowledge Illusion.

“But,” he goes on, “if the question is ‘How are we justified in claiming we know things?’ and ‘Whom should we trust?’” then the matter is an urgent one. The retraction in June of two papers on covid-19 in the Lancet and the New England Journal of Medicine, after researchers put too much trust in a dishonest collaborator, is an example of what happens when epistemic dependence is mishandled. And the rise of misinformation about issues like vaccines, climate change, and covid-19 is a direct attack on epistemic dependence, without which neither science nor society as a whole can function.

To better understand epistemic dependence, I looked at an extreme case: LIGO. I wanted to understand how the physicists who work there “know” that those two black holes collided several galaxies away, and what it means for how any of us knows anything.

As Giaime tells it, LIGO’s story begins with Albert Einstein. A century ago, Einstein theorized that gravity is a warping of the spacetime continuum, and argued that accelerating masses send out ripples in spacetime at the speed of light. But hopes of detecting such waves remained dim for decades, because they would be too small to measure.

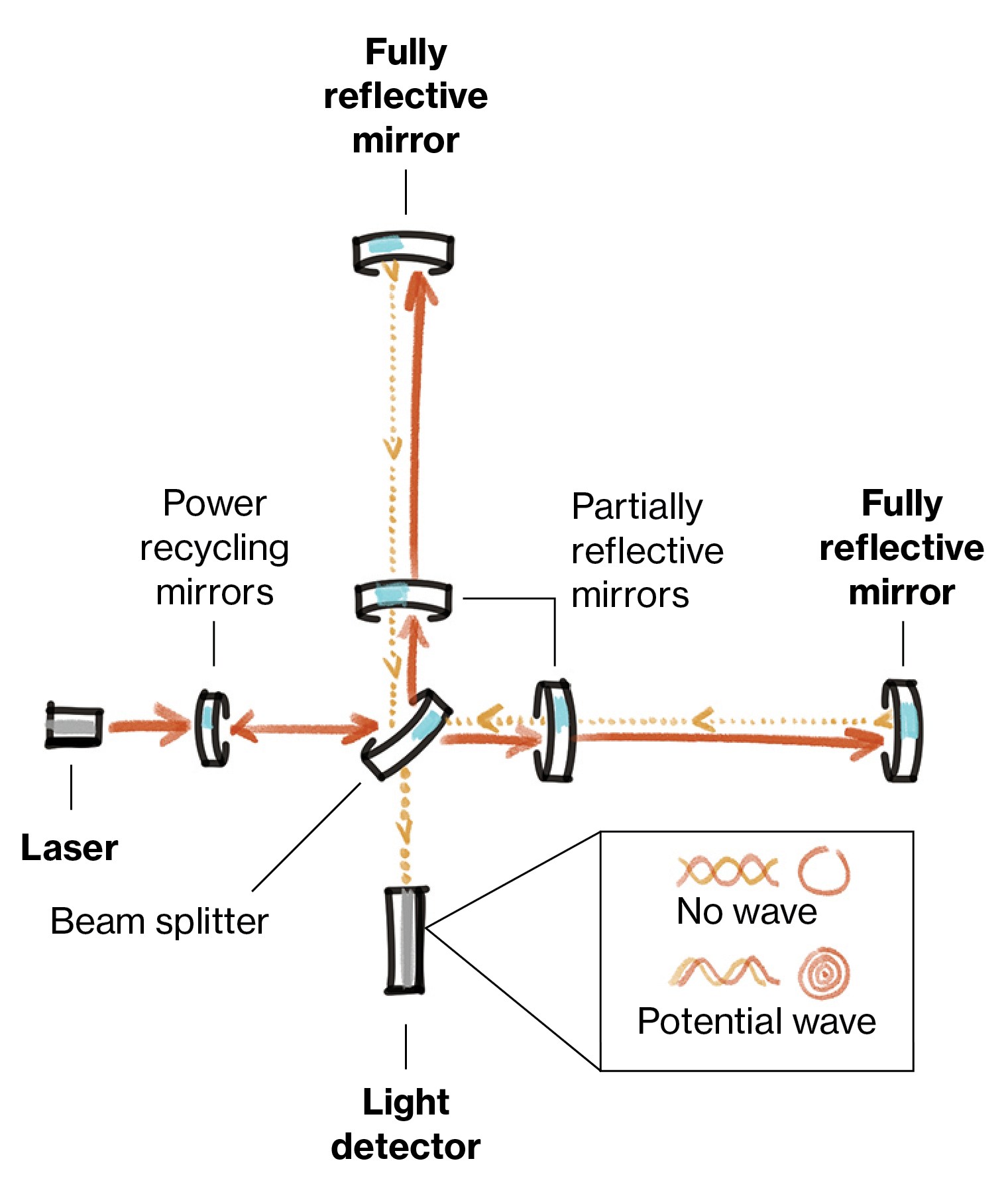

LIGO uses laser interferometry, based on a design the MIT physicist Rainer Weiss published in 1972. An interferometer, from above, resembles a capital L, with two arms at a right angle. A laser injected at the elbow of the L is split in two, reflects off a mirror at the end of each arm, and recombines in such a way that the peaks and valleys of the light waves cancel each other out.

The Laser Interferometer Gravitational-Wave Observatory (LIGO) is based on a design published by MIT physicist Rainer Weiss in 1972. A laser is injected at the elbow of the L, splitting in two and bouncing off mirrors at the end of each 4-kilometer- long arm. When they recombine, the peaks and valleys of the light waves cancel each other out. The theory was that gravity waves, if they existed, would cause the waves to desynchronize.

Weiss knew that as a gravitational wave passed, it would stretch space in the direction of one arm while contracting it in the direction of the other. As a result, the distances traveled by the laser beams would change, and the light waves would no longer cancel each other out. The light detector would then see a clear wave pattern. After decades of construction and more than a billion dollars, that’s what LIGO has officially detected nearly a dozen times since 2015.
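For readers who want to see the cancellation trick in numbers, here is a minimal Python sketch of an idealized Michelson interferometer, the textbook ancestor of Weiss’s design. It assumes a perfect 50/50 beam splitter, perfect mirrors, and a laser wavelength of 1,064 nanometers; the function name `dark_port_fraction` is hypothetical, and the real LIGO detectors add arm cavities and many other refinements that this sketch ignores.

```python
import math

# Assumption: an infrared laser wavelength of 1,064 nanometers.
WAVELENGTH_M = 1064e-9


def dark_port_fraction(arm_difference_m: float, wavelength_m: float = WAVELENGTH_M) -> float:
    """Fraction of the input light that reaches the photodetector of an
    idealized Michelson interferometer tuned so the two beams cancel.

    arm_difference_m is how much longer one arm is than the other. Each beam
    travels its arm twice, so the round-trip phase difference is 2*k*dL; the
    beams recombine with opposite sign, which gives sin^2(k*dL).
    """
    k = 2 * math.pi / wavelength_m  # wavenumber, radians per meter
    return math.sin(k * arm_difference_m) ** 2


if __name__ == "__main__":
    for dl in (0.0, 1e-18, 1e-12, WAVELENGTH_M / 8, WAVELENGTH_M / 4):
        print(f"arm difference {dl:.3e} m -> {dark_port_fraction(dl):.3e} of the light")
```

With the arms perfectly balanced, no light reaches the detector; a difference of a quarter wavelength would send all of it there. The stretches LIGO hunts for are vastly smaller, so in this idealized model they leak out only a sliver of light.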

The instrument’s sensitivity is hard to fathom. Each arm is four kilometers long. Over that distance, LIGO can detect changes as small as one-ten-thousandth the diameter of a proton. “The more physics and engineering you know,” Giaime told me, “the more crazy that sounds.”
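The arithmetic behind that claim is easy to check. This minimal Python sketch assumes a proton diameter of about 1.7 femtometers (roughly twice the measured charge radius of about 0.84 femtometers); the constant and function names are illustrative, not taken from LIGO’s software.

```python
# Back-of-the-envelope check of LIGO's sensitivity as described in the text.
ARM_LENGTH_M = 4_000.0       # each arm is four kilometers long
PROTON_DIAMETER_M = 1.7e-15  # assumption: about twice the ~0.84 fm proton charge radius


def fractional_change(length_change_m: float, arm_length_m: float = ARM_LENGTH_M) -> float:
    """Change in arm length expressed as a fraction of the arm's full length."""
    return length_change_m / arm_length_m


# "one-ten-thousandth the diameter of a proton"
smallest_change_m = PROTON_DIAMETER_M / 10_000

if __name__ == "__main__":
    print(f"smallest detectable change: {smallest_change_m:.1e} m")
    print(f"as a fraction of the arm length: {fractional_change(smallest_change_m):.1e}")
```

Under these assumptions the arm changes length by a few parts in 100 billion trillion.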

That’s smaller than the random jiggle in the molecules of the mirror, so a number of tricks are used to cut down on noise. The light travels down the tunnel through a vacuum. The laser is powerful, so the beam contains a great many photons, which lets random fluctuations in the light average out. The mirrors hang from glass threads to passively damp vibrations. And each mirror suspension is mounted on a rig that actively quiets vibrations using feedback from seismometers and motion sensors, much like extravagant noise-canceling headphones. The system also accounts for measured interference from magnetic fields, the weather, the electrical grid, and even cosmic rays.

Still, with only one detector, you can be only so sure that any signal is coming from space. If two detectors receive the same signal at nearly the same time, confidence increases sharply, because random local noise rarely strikes both at once. You also can start to localize the source in the sky. That’s why there are two LIGO stations, in Louisiana and Washington, as well as other gravitational-wave observatories: Virgo, in Italy, and GEO600, in Germany, with another being built in Japan.
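A back-of-the-envelope Python sketch shows why a second detector helps so much. It assumes each detector produces independent, random noise triggers, and that a real signal must reach both sites within about 10 milliseconds, the light-travel time across the roughly 3,000 kilometers between the Louisiana and Washington sites. The trigger rate of one per hour is made up purely for illustration, and the function names are hypothetical. The second function turns an arrival-time difference into an angle, which is why a single pair of detectors narrows the source only to a ring on the sky.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0
# Assumption: the two LIGO sites are roughly 3,000 km apart, so a real
# signal reaches one site at most about 10 ms before the other.
BASELINE_M = 3.0e6
MAX_DELAY_S = BASELINE_M / SPEED_OF_LIGHT_M_S


def accidental_coincidence_rate(rate_a_hz: float, rate_b_hz: float, window_s: float) -> float:
    """Expected rate of chance coincidences between two detectors whose noise
    triggers are independent and random (Poisson). A trigger in A counts as a
    coincidence if B fires within +/- window_s of it. Valid when
    2 * window_s * rate is much smaller than 1."""
    return 2.0 * window_s * rate_a_hz * rate_b_hz


def source_angle_deg(delay_s: float, baseline_m: float = BASELINE_M) -> float:
    """Angle between the source direction and the line from site A to site B,
    for a plane wave, where delay_s = (arrival at A) - (arrival at B).
    The path difference baseline * cos(angle) equals c * delay_s, so one pair
    of detectors only narrows the source to a ring on the sky."""
    cos_angle = SPEED_OF_LIGHT_M_S * delay_s / baseline_m
    if abs(cos_angle) > 1.0 + 1e-9:
        raise ValueError("delay exceeds the light-travel time between the sites")
    cos_angle = max(-1.0, min(1.0, cos_angle))  # absorb floating-point overshoot
    return math.degrees(math.acos(cos_angle))


if __name__ == "__main__":
    per_hour = 1.0 / 3600.0  # hypothetical noise-trigger rate in each detector
    seconds_per_year = 365.25 * 24 * 3600
    print(f"false alarms per year, one detector: {per_hour * seconds_per_year:.0f}")
    chance = accidental_coincidence_rate(per_hour, per_hour, MAX_DELAY_S) * seconds_per_year
    print(f"chance coincidences per year, two detectors: {chance:.3f}")
    print(f"simultaneous arrival -> {source_angle_deg(0.0):.1f} degrees from the baseline")
```

With these made-up numbers, one detector alone would log about 8,766 false alarms a year, while chance coincidences between the two would turn up only about once every 20 years.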

As you might imagine, LIGO requires a big team with varying skills. The division of labor in science, as in industry, has grown ever finer. A 1786 book on experimental physics covered astronomy, geology, zoology, medicine, and botany. A reader could master the bulk of human knowledge in all those areas. Each is now its own field, and each has sprouted subfields. Encyclopedic expertise has become untenable.

Accomplishing anything outside a narrow field requires scientists to share skills. Collaborations have grown as new technologies like the internet have made it easier to communicate. From 1990 to 2010, the average number of coauthors on a scientific paper increased from 3.2 to 5.6. A 2015 paper on the mass of the Higgs boson boasted more than 5,000 authors. Even lone authors don’t work alone—they cite work by others they often haven’t even read, according to Sloman: “We’re trusting that the abstract is actually a summary of what’s in the paper.”

The paper announcing LIGO’s first detection of gravitational waves, published in 2016, had more than 1,000 authors. Do all of them fully understand every aspect of what they wrote? “I think a lot of people have gotten their heads around most of it at a very high level,” David Reitze, a Caltech physicist and LIGO’s executive director, said of the team’s findings. But then there is the practical matter: “How do you know that this complex detector that has hundreds of thousands of components and electronics and data channels is behaving properly and actually measuring what you’re thinking we’re measuring?” On that point, he said, “Hundreds of people,” working as a team, “have to worry about that.”

I asked Reitze if he’d have trouble explaining any aspects of the 2016 paper. “There are certainly pieces of that paper that I don’t feel like I have enough detailed knowledge to reproduce,” he said—for instance, the team’s computational work comparing their data with theoretical predictions and nailing down the black holes’ masses and velocities.

Giaime, the head of the Livingston operation, guesses that fewer than half the coauthors of the paper ever set foot in one of the observatory sites, because their role didn’t require it. To justify the observatory’s results, he noted, a person would need to understand aspects of physics, astronomy, electronics, and mechanical engineering. “Is there anybody who knows all of those things?” he said. “We almost had a leak in our beam tube because of something called microbial induced corrosion, which is biology, for Pete’s sake. It gets to be a bit much for one mind to keep track of.”

One episode in particular emphasizes the team’s interdependence. LIGO detected no gravitational waves in its first eight years of operation, and from 2010 to 2015 it shut down for upgrades. Just two days after being rebooted, it received a signal that was “so beautiful that either it had to be a wonderful gift or it was suspicious,” says Peter Saulson, a physicist at Syracuse University, who led the LIGO Scientific Collaboration—the international team of scientists who use LIGO and GEO600 for research—from 2003 to 2007. Could someone have injected a fake signal? After an investigation, they concluded that no one person understood the whole system well enough to pull it off. A believable hack would have required a small army of malcontents. Imagining “such a team of evil geniuses,” Saulson says, “became laughable.” So, everyone conceded, the signal must be real—two black holes colliding. “In the end,” he says, “it was a sociological argument.”

We often overestimate our ability to explain things. It’s called the illusion of explanatory depth. In one set of studies, people rated how well they understood devices and natural phenomena, like zippers and rainbows. Then they tried to explain them. Ratings dropped precipitously once people had confronted their own ignorance. (For an amusing demonstration, ask someone to draw a bicycle. Results often don’t resemble reality.)

I asked Reitze if he himself had fallen prey to the illusion. He noted that LIGO relies on thousands of sensors and hundreds of interacting feedback loops to account for environmental noise. He thought he understood them pretty well, until he prepared to explain them in a talk. A cram session on dynamical control theory (the mathematics of managing systems that change) ensued.

The illusion may draw on what Sloman, the cognitive scientist, calls “contagious understanding.” In one set of studies he conducted, people read about a made-up natural phenomenon, like glowing rocks. Some were told the phenomenon was well understood by experts, some were told it was mysterious, and some were told it was understood but classified. Then they rated their own understanding. Those in the first group gave higher ratings than the others, as if just the fact that it was possible for them to understand meant they already did.


Treating others’ knowledge as your own isn’t as silly as it sounds. In 1987, the psychologist Daniel Wegner wrote about an aspect of collective cognition he called transactive memory, which basically means we all know stuff and also know who else knows other stuff. In one study, couples were tasked with remembering a set of facts, like “The Kaypro II is a personal computer.” He found that people naturally tucked away more facts on a topic when they believed that their partner was not an expert on it. They wordlessly divided and conquered, each acting as the other’s external memory.

Other researchers studying transactive memory asked groups of three to assemble a radio. Some trios had trained as a team to complete the task, while others comprised members who had trained individually. The trios who had trained as a team demonstrated greater transactive memory, including more specialization, coordination, and trust. In turn, they made fewer than half as many errors during assembly.

Each individual in those trios may not have known how to assemble a radio as well as those who had trained as individuals. But as a group—humans’ normal mode of operation—their epistemic dependence bred success.

Several lessons follow from seeing your own knowledge as contingent on others’. Perhaps the simplest is to realize that you almost certainly understand less about just about any subject than you think. So ask more questions, even dumb ones.

Acknowledging your epistemic dependence might even make debate more productive. In a 2013 paper, Sloman studied the role the illusion of explanatory depth plays in political polarization. Americans rated their understanding of, and support for, policies related to health care, taxation, and other hot-button issues. Then they tried to explain the policies. The more the exercise reduced their own sense of understanding, the less extreme their positions became. You can’t take a firm stand on shaky ground. No one understands Obamacare, Sloman said—not even Obama: “It’s too long. It’s too complicated. They just summarize it with a couple of slogans that miss 99.9% of it.”

Another lesson comes from Hardwig’s original paper on epistemic dependence. The seemingly obvious notion that rationality requires thinking for oneself, he wrote, is “a romantic ideal which is thoroughly unrealistic.” If we followed that ideal, he wrote, we would hold only relatively crude and uninformed beliefs that we had arrived at on our own. Instead of thinking for yourself, he suggested, try trusting experts—even more than you might do already.

I asked Sloman (an expert) if that was a good idea. “Yeah!” he said. “Florida. Do I have to say anything else?” (Florida’s covid-19 cases were skyrocketing at the time as people ignored experts’ advice on protective measures.) In reality, of course, rationality requires a balance between taking advice and thinking for yourself. Without at least scratching the surface of an issue, you’ll fall for anything.

To test the veracity of a fact, check whether experts agree on it. Gabriela González, a Louisiana State physicist and another former head of the LIGO Scientific Collaboration, said that as a diabetic, “I would never try to get the data of a clinical trial and analyze it myself.” She looks for medical consensus in news stories about potential treatments.

You can also have an independent expert review another expert’s claims. In science, this is the process of peer review. In daily life, it’s checking with your uncle who knows about cars, cooking, or whatever. Inside LIGO, committees review each stage of an experiment. They might ask independent experts to dig into code others have written, or just ask probing questions. Researchers analyzing the combined data use multiple algorithms in parallel, each written by different people. They also run frequent tests of the hardware and software.

Another audit, which we instinctively use in everyday life, is to see how people respond to questions about their expertise. “Dialectical superiority” is a cue that Alvin Goldman, a philosopher at Rutgers University, suggested using in a 2001 paper titled “Experts: Which ones should you trust?” He wrote that in a debate between two experts, the one who displays “comparative quickness and smoothness,” and has rebuttals at the ready, could be considered the one with the more thorough understanding of the issue. However, he also acknowledged that this cue is a weak one. (Having all the answers is sometimes a bad sign, Sloman said: “I think an important cue is: Do they express sufficient humility? Do they admit what they don’t know?”)

Goldman’s paper offered four more cues as to whether an expert’s opinion is reliable. They are the approval of other experts; credentials or reputation; evidence about biases or conflicting interests; and track records. He acknowledged problems with all four but suggested that track records were most helpful. If these seem like ad hominem appraisals rather than evidence-based ones, Sloman says, that’s not a bad thing: “It strikes me as a lot easier to evaluate someone’s credibility than to acquire all the knowledge that that individual has. It’s orders of magnitude easier.” As for formal credentials, he said, “You can call me an elitist if you want, but I think having a degree from a reputable institution is a sign.”


Ultimately, knowledge is about both evidence and trust. Harry Collins, a sociologist at Cardiff University who has been writing about the gravitational wave community for decades, emphasizes how face-to-face interactions shape what we believe to be true. He recalls a Russian scientist who’d visited Glasgow to work with a team that couldn’t reproduce his results. Even though they didn’t succeed during his visit, they no longer doubted him, because of the way he worked in the lab. “For instance, he’d never go out for lunch,” Collins said. “He insisted on having a sandwich—when he’d come all the way from Russia and could be enjoying delicious Glaswegian curry.” They believed that no one so dedicated would be making up his findings, so they kept trying, and eventually they achieved similar results.

Epistemic dependence also highlights the importance of sharing your work in progress. Before interferometers, when physicists built gravitational wave detectors using vibrating aluminum bars, they protected their raw data and shared only lists of detections they thought they had made. Eventually they began to trust each other and work together more closely. If the physicists at LIGO and other detectors had stuck to the old ways, Giaime said, “we could have blown the discovery of the century”—a 2017 neutron star collision that, unlike the 2015 black hole collision, was also studied by radio, gamma-ray, x-ray, and visible-light telescopes. That was made possible only because LIGO and Virgo shared data, allowing them to quickly pinpoint where the collision took place. Without that cooperation, Giaime said, “we wouldn’t have known the sky position of the pair of neutron stars accurately enough to point telescopes at it soon enough.”

Of course, epistemic dependence also has its downsides. Consider the costs of turnover within organizations. If someone who’s a key part of your project leaves, you lose pieces of collective knowledge and capability that you can’t make up on your own.

As scientific collaboration has changed, so have scientific awards. “The Nobel Prize is an anachronism from an earlier age when things were done by an individual or a small number of people,” says Weiss, who shared the Nobel Prize in Physics in 2017 with Kip Thorne and Barry Barish for his work on gravitational waves. “I felt awkward on receiving it and was only able to justify it by saying I was a symbol for all of us.”

In Giaime’s office at the end of the tour, he pointed to a plaque on his wall. In 2016, the Special Breakthrough Prize in Fundamental Physics was awarded to the LIGO Scientific Collaboration. One million dollars was shared by Weiss, Thorne, and another founder, Ronald Drever, and $2 million was split equally among about a thousand other contributors. “It’s a memento to a kind of a new era of science,” Giaime said, “where large groups can get prizes together.”

The awards are catching up to how science operates today. Researchers have always depended on each other to fill in gaps in knowledge, but specialization and collaboration have grown more extreme, integrating global networks of domain experts. The LIGO Scientific Collaboration involves hundreds of people, many of whom have never met. They use tools and knowledge contributed by thousands of others, who in turn rely on the tools and knowledge of millions of others. Such organization doesn’t happen by chance: it requires sophisticated technical and social systems, working hand in hand. Trust feeds evidence feeds trust, and so on. The same holds true for society at large. If we undermine our self-reinforcing systems of evidence and trust, our ability to know anything and do anything will break down.

And perhaps there’s a broader, even philosophical, lesson: You know much less than you think you do, and also much more. Knowledge can’t be divided at the seams between people. Maybe you can’t define photosynthesis, but you’re an integral part of an epistemic ecosystem that can not only define it but examine it at the smallest scales, and manipulate it for the benefit of all. In the end, what do you know? You know what we know.

from MIT Technology Review https://ift.tt/3m8Rtcs
